Student to deliver invited talk on future directions in deep reinforcement learning

CSE PhD student Wilka Carvalho won a competition to deliver an opinion talk at the Deep Reinforcement Learning (RL) Workshop at the Conference on Neural Information Processing Systems (NeurIPS 2021). His talk explores the opportunities available in the field of deep RL to design agents that can recombine, or compose, their knowledge to generalize to new settings.

The Deep RL Workshop brings together researchers working at the intersection of deep learning and reinforcement learning. It aims to give interested researchers outside the field a high-level view of the current state of the art and of potential directions for future contributions.

Carvalho argues that the ability to discover what he calls composable primitive representations will play a key role in enabling deep RL agents to become helpful companions in society. Primitive representations are the basic building blocks of an agent’s or a person’s knowledge of their environment. Composability means that these building blocks can be combined to perform new tasks in novel settings. For example, people can compose their knowledge of operating electronics with their knowledge of traversing terrain to use a cellphone while on a hike, even if they’ve never done this before.

Carvalho argues that these composable primitive representations can allow an agent to build up a model of its observations over time and solve a variety of practical problems. This ability can then allow the agent to generalize features across different complex settings.

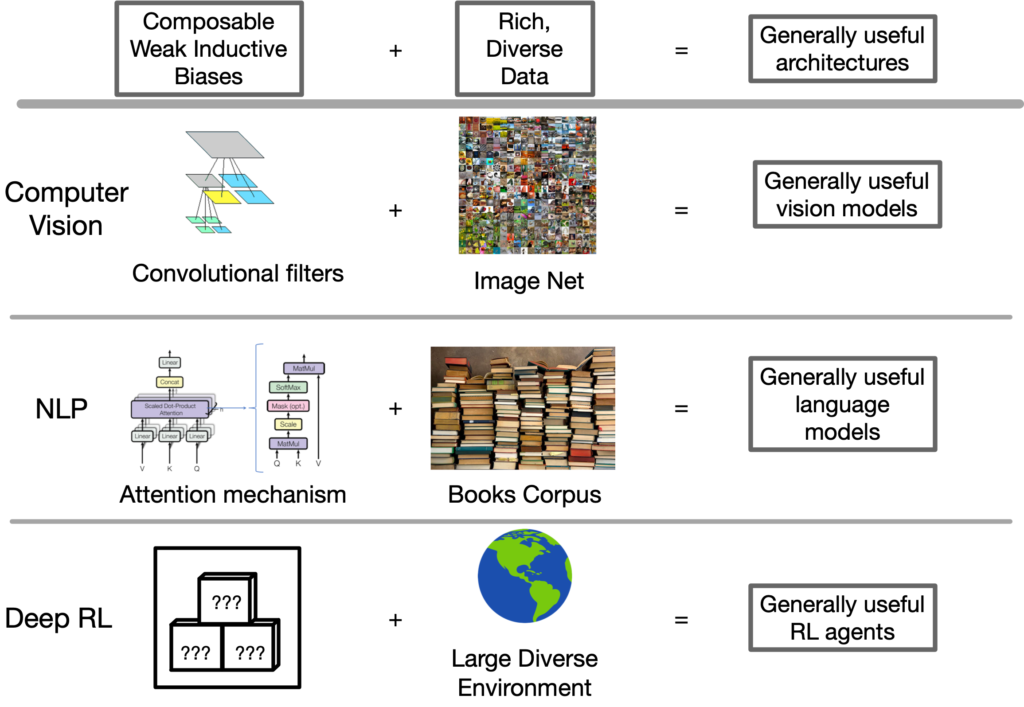

The key to building algorithms that can discover these primitives, he says, is weak inductive biases, meaning that a learning algorithm makes few assumptions about its input data when predicting outputs. This approach turns out to scale extremely well with deep RL as the amount of training data grows. Existing examples of models that can discover composable primitives, like convolutional neural networks and transformers, weren’t designed for deep RL but have already demonstrated their usefulness to the field. Carvalho feels deep RL can learn from their success, particularly since they have been trained on massive amounts of image and language data.

“Perhaps, this is what deep RL is missing,” Carvalho says, “a large, rich environment where an agent has the ability to learn from massive amounts of diverse experiences in the world.”

Carvalho delivers his talk, titled “Weak Inductive Biases for Discovering Composable Representations,” on December 13.
